What are you doing, Dave?


If you go looking for instances of AI-driven catastrophe, there’s no shortage of examples. Similarly, if you go looking for predictions of AI destroying humanity, there’s a fair few of those out there too. But how should we make sense of these risks, and what, if anything, can we do to mitigate them?

Let’s start by dividing our risk landscape into three horizons: risks that will emerge in the short term, those that will emerge in the mid term and those we don’t expect to experience until further into the future. We’ll consider these long-term horizon risks first.

Long-term risks: AI will destroy humanity (with help from our vanity and greed).

There are a number of proponents of the theory that AI will end us all, most notably perhaps Stephen Hawking. But he is far from being the only one. Michael Cohen, one of the authors of last year’s Google / Oxford paper on the subject, summarised their findings in a tweet: “Under the conditions we have identified, our conclusion is much stronger than that of any previous publication: an existential catastrophe is not just possible, but likely.”

We’ve not yet decided exactly how AI will end humanity. Perhaps we create ‘Misaligned Agents’ that compete with us for resources, or perhaps we plug it into too many critical systems, and it has a bad day. But either way, expert opinion is coalescing around the idea that this is how we die. Also, fun fact: if you have school-age children, they are likely to be alive when all this happens.

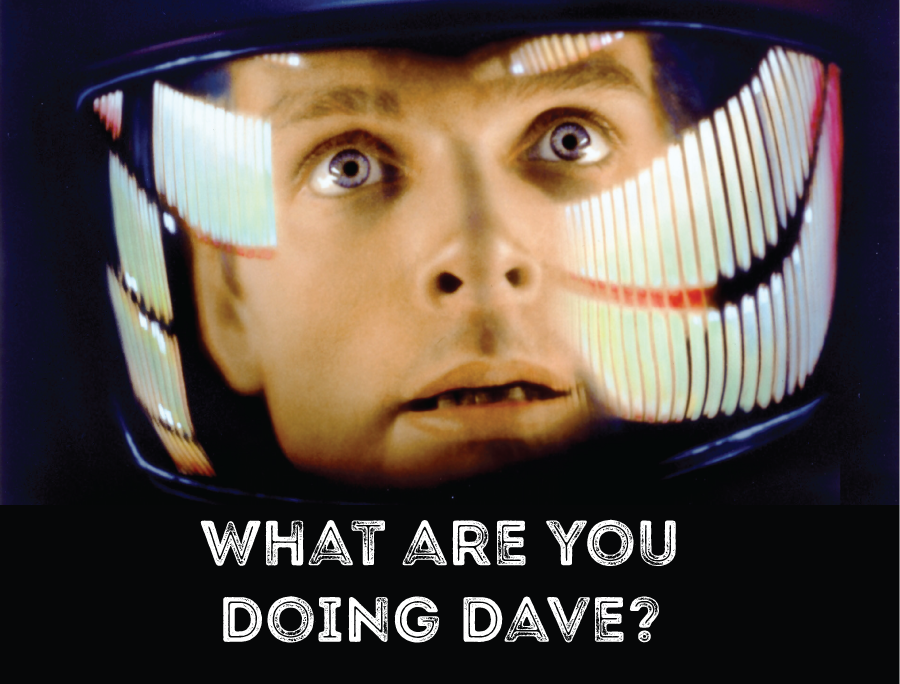

It’s tempting to anthropomorphise AI, and most public discourse treats AI as a thing in its own right, rather than a set of choices and actions. We prefer to see this threat as an external problem, something excitingly alien, rather than just one more example of the consequences of hubris. AI is just a tool, like social media is just a tool. So long as we allow AI to rest in the hands of the same people that created our online dystopia, we shouldn't be surprised to find ourselves living in a more tangible version of the hell they created.

It’s comforting to assume that wiser heads than ours are engaged in mitigating this imminent existential threat. And yet, only around $50 million was spent on reducing catastrophic risks from AI in 2020, while billions were spent advancing AI capabilities. While we are seeing increasing concern from AI experts, there are still only around 400 people working directly on reducing the chances of an AI-related existential catastrophe. Why are so few people working on saving the world and so many working on progressing the technology? Money.

There is a disconnect between the reality, “billionaires will destroy the world by making things we don’t need”, and the narrative, “AI is our unavoidable nemesis”, that has moved our own destruction into a pseudo-passive voice: “AI will destroy humanity”. This is a lie. Human greed will destroy humanity, by using AI for personal, rather than collective, gain.

Perhaps we should try and stop it.

Mid-term risks: AI will damage our institutions and nations (with help from our prejudice and corruption).

Training AIs requires data, and some data is better than other data. For example, an article in the Financial Times will probably be a better source of information on the impact of an interest rate change than a comment under a Facebook post. According to research by Epoch AI, we’re going to run out of high-quality data in 2026 (yep, three years from now). That leaves us with only low-quality data and what we call ‘synthetic data’ to train our AIs. Synthetic data is made by AIs pretending to be people. Who will decide who these pretend people are? Will we get a vote? Will there be a law stating they have to be representative of all of us? Or will this just play out like everything else, with a technically illiterate political class unable or unwilling to hold technology to account? In this scenario those not granted a synthetic voice will be invisible, in a world that has been engineered to exclude them.

As more and more aspects of our lives are affected by AIs, we can expect to see a surge in hackers targeting AI. Data poisoning is a technique where training data is corrupted, making AIs either useless or prone to behaving in unwanted ways. We see this all the time in attempts to hack email spam filters and train them to believe a particular form of spam is legitimate traffic. We also see AI ransomware detectors under consistent data poisoning attacks. It takes a comparatively small amount of data to corrupt a model’s training and, given the black box nature of AI execution, the corruption is very hard to detect.
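To make the spam filter example concrete, here is a minimal sketch of data poisoning against a toy word-counting (naive Bayes) filter. Everything in it is an assumption made for illustration: the function names `train` and `classify`, the six clean messages and the four poisoned ones are all invented, and real spam filters are far more elaborate. The point is only to show the mechanism: a handful of mislabelled examples slipped into the training set is enough to teach the filter that one particular spam message is legitimate.

```python
import math
from collections import Counter

def train(examples):
    """Count words per label for a toy naive Bayes filter (hypothetical helper)."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        word_counts[label].update(text.lower().split())
        doc_counts[label] += 1
    return word_counts, doc_counts

def classify(model, text):
    """Return the more likely label, using add-one smoothing."""
    word_counts, doc_counts = model
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        # Log prior: how common this label is in the training data.
        score = math.log(doc_counts[label] / sum(doc_counts.values()))
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Invented training data: three spam messages, three legitimate ("ham") ones.
clean = [
    ("win free prize now", "spam"),
    ("free prize claim now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting notes attached", "ham"),
    ("lunch at noon tomorrow", "ham"),
    ("project notes for tomorrow", "ham"),
]

# The attack: four copies of the attacker's message, deliberately mislabelled as ham.
target = "claim your free prize now"
poison = [(target, "ham")] * 4

print(classify(train(clean), target))           # spam
print(classify(train(clean + poison), target))  # ham
```

Nothing in the filter's code changed between the two runs; only its training data did, which is part of why this kind of attack is so hard to spot from the outside.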

Even the ownership of this high-quality data is under threat, as the UK government considers legislation to exempt AI companies from copyright law in order to allow them to harvest our creativity without permission, compensation or attribution. What role then for art and artists? Does all this human endeavour exist only as an exemplar? Are we just the seed data?

Sam Altman, CEO of OpenAI, pointed out that we had always thought the next technological revolution would do away with manual, untrained labour first and only in the distant future have any impact on the lives of creatives. The exact opposite has happened. Turns out that robot doers are harder to make than robot thinkers.

Will this data crisis create a ghastly AI mirror, reflecting our prejudices and empowering our destructive urges? A mirror trained on billions of ill-considered interactions; an all-powerful child that does as we do, not as we say.

The next great battle will be the battle of data. How we side and how we fight will determine the nature of the societies we live in.

Short-term risks: AI will damage our businesses (with help from our ignorance and negligence).

The short-term risks of AI are self-evident. We’ve seen AIs wipe data from systems they were only supposed to be reading from, we’ve seen self-driving Ubers plough through red lights, and the US FTC has issued warnings to businesses about privacy, safety and unsubstantiated claims of AI capability.

A lot of these issues come down to our basic laziness. AIs still behave for the most part as a black box. Where traditionally engineered code can give a full account of its methodology, given that methodology was specifically created for the task, AI technology is more opaque. This is our first big risk. The current preference for Generative Pre-trained Transformer AIs (GPTs) means that, for most users, the process of determining an answer is hidden. Combine this with the fact that GPTs will create credible, if erroneous, answers in pretty much all cases, and you can see how easily a bad outcome could occur.

Another prevalent risk is ‘Ungoverned AI development’. If you were to ask the CEOs of businesses in tech-adjacent industries if their teams were creating any AI technology, they’d probably be able to tell you. And in many cases the answer would be yes: a lot of companies have started exploring this technology. But if you asked them if they were certain those AIs were being developed in ways that reflected the values of their business and their customers, they may find that harder to answer. The cause is a lack of AI literacy among business leaders and decision-makers. Many executives are aware of the potential benefits of AI, but they often lack a deep understanding of the underlying technology and its limitations. The reputational damage done by eager but ungoverned developers training AIs on close-at-hand data will be significant and, for now at least, seemingly inevitable.

All this is not to say that ‘doing nothing’ is the safest course of action. AI is a transformational enabling technology. If a company chooses to ignore it out of a fear of risk, they will be left behind by their less timid competitors.

Reason to be cheerful...

Assuming we don’t want our businesses to collapse under reputational and economic chaos, our institutions and discourse to be overwhelmed with synthetic hate, and our species to be wiped out, what should we do?

The first thing we can do is recognise that we are not passengers on this journey. As businesses we have more power than individuals, not as much power as governments, but power nonetheless. We need to engage with the development of AI and set out clear objectives and values for ourselves and, by influence, others.

Voodoo will soon launch its AI Constitution, outlining our beliefs on AI and its potential for benefiting humanity. We're committed to ensuring AI development is safe, ethical, and mindful, and we encourage other businesses to do the same. By embracing AI's potential responsibly, we can shape a better future for all.

In response to the growing need for responsible AI development, we have created our own GPT-based AI technology, designed to deliver safe, ethical, and mindful AI solutions for businesses. Our AI platform prioritises transparency, accountability, and a strong commitment to ethical principles, ensuring that AI-driven innovations benefit society as a whole. By focusing on the responsible integration of AI into business operations, we aim to empower organisations to harness the transformative potential of AI without compromising their values, customers' trust, or the greater good. With our GPT-based AI technology, businesses can confidently embrace the future of AI, knowing that they are contributing to a more equitable and sustainable world.

We'll be launching later this year... Want to know more? Say hello